Moral Tester

How might we align artificial intelligence’s decision algorithms with human values? MIT's Moral Machine, Reimagined for All

Timeline

February 2020 - April 2020

Role / Context

Product Designer / Team Project with Jungmoo Song

Leveraged Skills

Digital product design, UX/UI design

PROBLEM

Artificial intelligence's decision algorithms and human values aren't aligned. In extreme situations, how should AI make value-based decisions?

AI can't think or understand the way humans do, which raises hard questions: in unavoidable situations such as the trolley problem, what should AI do, and who should be responsible for its decision? This led us to ask: how can we align AI's decision algorithms with human values?

"We need society to come together to decide what tradeoffs we are comfortable with."

Iyad Rahwan, Associate Professor at MIT Media Lab

SOLUTION

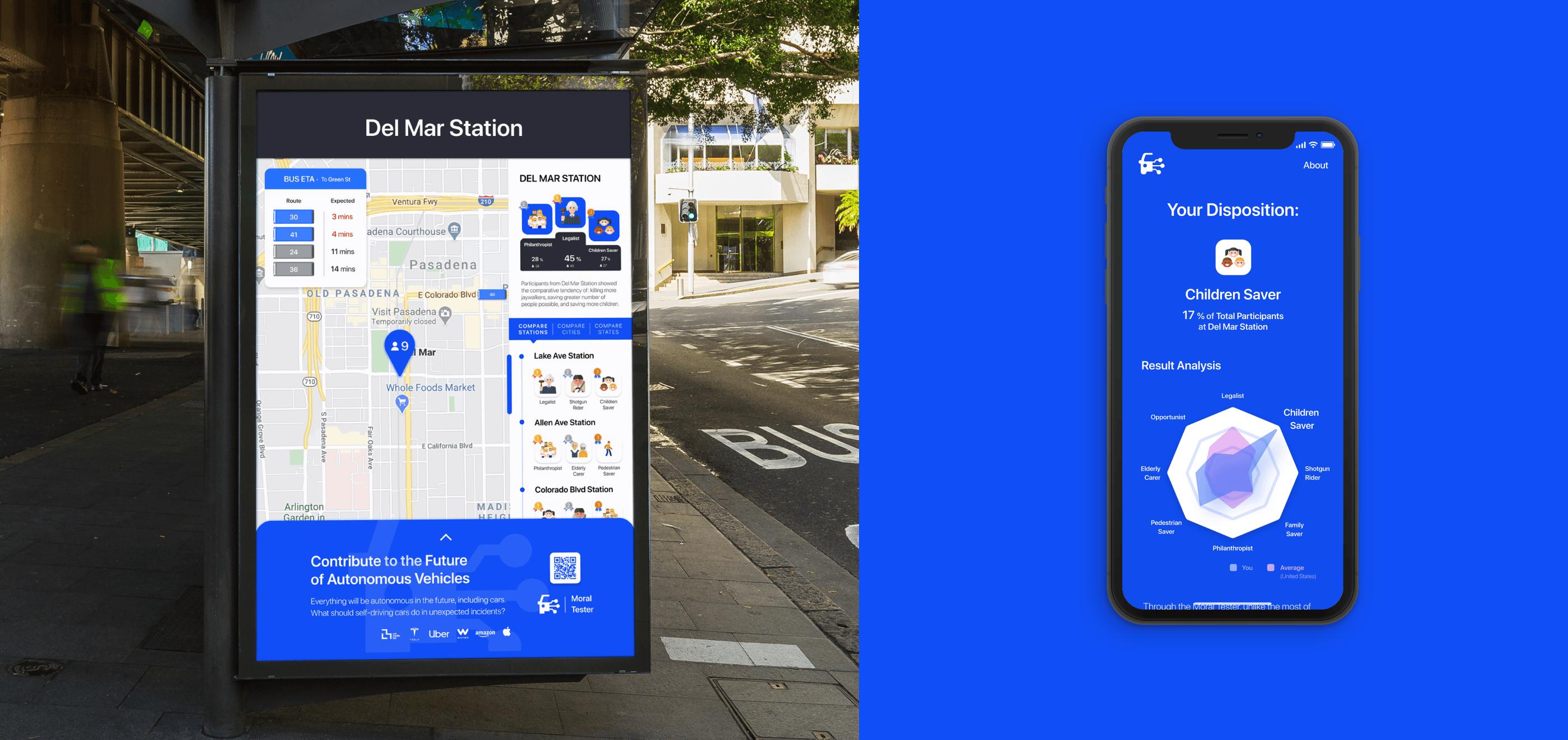

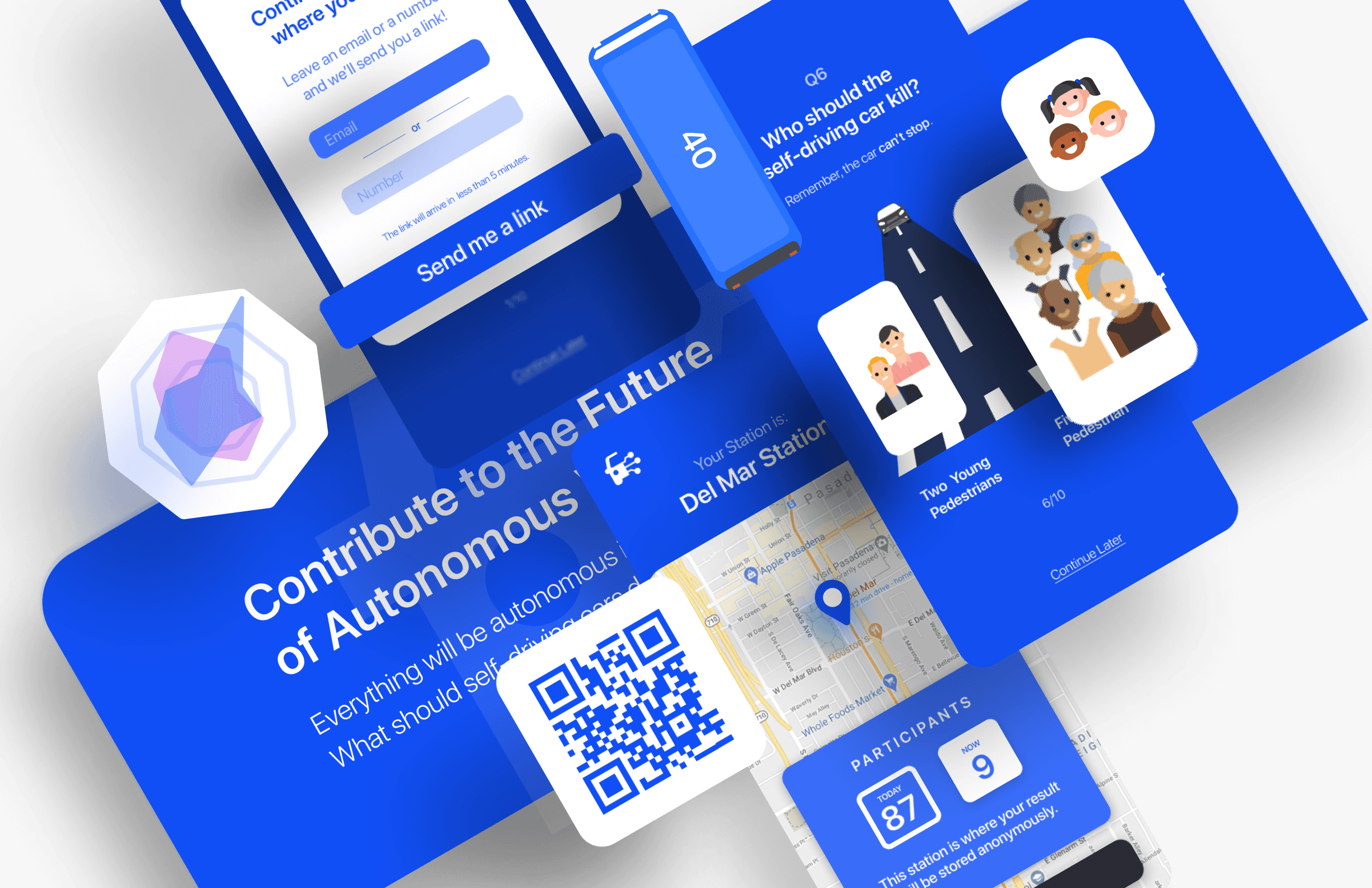

Redesigning the wheel, not remaking it

While researching this topic, I came across MIT's Moral Machine, which already addresses this issue. Rather than building something from scratch, my teammate and I decided to reimagine this existing project to make it more effective.

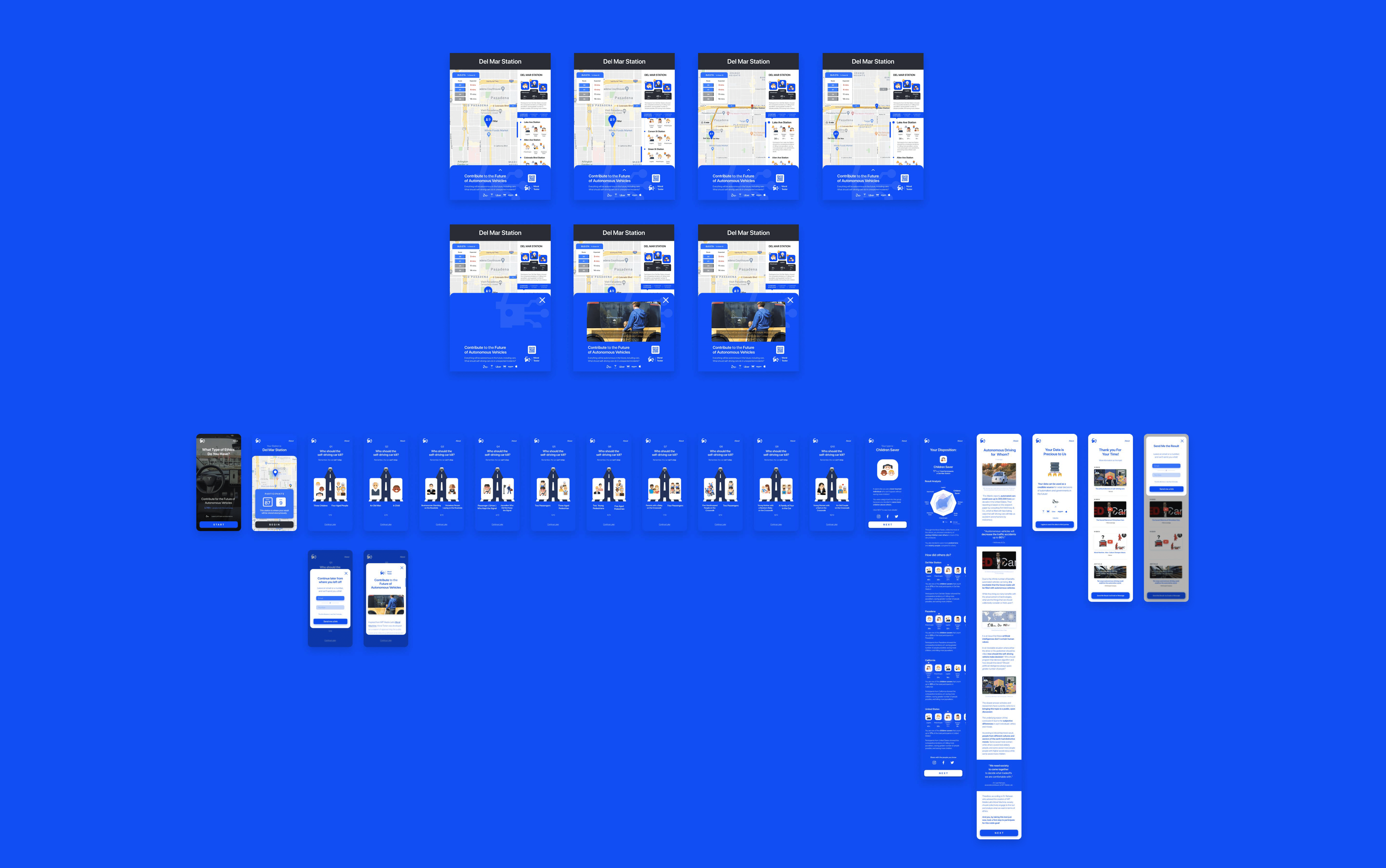

Moral Dilemma Simplified

A simple, intuitive flow of questions within each moral dilemma

Categorizing Users Based on Their Disposition

People are naturally drawn to finding out their own dispositions

Using Public Screens as Entrance Points

Reaching a wider public through public screens and displays

REFLECTIONS

💭 What I’d do differently next time...

Strive to make the entrance moment magical

Since this was a short academic design project meant to encourage students to briefly explore the ethics of AI, I couldn't spend much time perfecting the early stage of the experience.

If I were to continue this project, I'd work to make the entrance and onboarding moments more captivating to lower the bounce rate.